Memory in Context (Kickoff Session)

This year's NABI symposium explores memory in context: how hippocampal circuits reveal the principles behind flexible, context-dependent memory.

Contrary to older views, hippocampal memory is not passive; it actively infers, interprets, and structures experience.

From Cognitive Maps to Predictive Maps

- The hippocampus encodes possible future states as a cognitive map.

- Maps are useful only if they can guide future actions—hence the link to reinforcement learning and Bellman’s equation.

- The Successor Representation (SR) is one way to encode such a predictive map for RL.

- Provides a predictive map: $M(s, s')$ is the expected (discounted) future occupancy of state $s'$ when starting from state $s$.

- Place cells encode the probability of being at future locations, not just the current location.

- Grid cells emerge as the eigenvectors (basis functions) of the SR, supporting low-dimensional spatial coding.
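As a rough illustration of the SR, the sketch below assumes a random-walk policy on a small ring of 8 states; the environment, the discount γ = 0.9, and the function names are illustrative choices, not taken from the talk. Writing the SR as a matrix $M$ and the policy's state-transition matrix as $T$, the SR obeys the Bellman-style recursion $M = I + \gamma T M$, so iterating that recursion converges to $M = (I - \gamma T)^{-1}$.

```python
def ring_transitions(n):
    """Random-walk transition matrix on a ring of n states (hypothetical toy environment)."""
    T = [[0.0] * n for _ in range(n)]
    for s in range(n):
        T[s][(s - 1) % n] = 0.5  # step left
        T[s][(s + 1) % n] = 0.5  # step right
    return T


def successor_representation(T, gamma=0.9, iters=500):
    """Solve M = I + gamma * T @ M by fixed-point iteration.

    M[s][s2] is the expected discounted number of future visits to s2
    when starting in s and following the policy encoded by T.
    """
    n = len(T)
    identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    M = [row[:] for row in identity]
    for _ in range(iters):
        # TM = T @ M, computed with plain lists to stay dependency-free
        TM = [[sum(T[i][k] * M[k][j] for k in range(n)) for j in range(n)] for i in range(n)]
        M = [[identity[i][j] + gamma * TM[i][j] for j in range(n)] for i in range(n)]
    return M


T = ring_transitions(8)
M = successor_representation(T, gamma=0.9)
print([round(v, 2) for v in M[0]])  # predictive "place field" of state 0
```

Row 0 of `M` peaks at the start state and decays with distance around the ring, which is the kind of predictive place field described above; each row sums to $1/(1-\gamma) = 10$. The grid-cell point corresponds to taking eigenvectors of $M$, which this dependency-free sketch leaves out.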

Limitations of Predictive Maps

- SR tracks locations but not entities (“who/what is where?”).

- Lacks one-shot generalization: animals instantly reuse new rules, but SR needs many trials to adapt.

- Can’t explain the diversity of hippocampal cell types (border, band, object-vector cells, etc.).
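One way to see why the SR adapts slowly: it is typically learned with a temporal-difference (TD) update that, after each transition $s \to s'$, moves row $s$ of $M$ a small step (learning rate α) toward a one-step target, $M(s,\cdot) \leftarrow M(s,\cdot) + \alpha\,[\mathbb{1}_s + \gamma M(s',\cdot) - M(s,\cdot)]$. The sketch below assumes a hypothetical 4-state linear track run from start to end, with the last state treated as terminal; it counts how many runs are needed before the start state correctly predicts reaching the end. The track, parameter values, and function names are illustrative assumptions.

```python
def td_sr_episode(M, path, alpha, gamma):
    """Apply one TD(0) successor-representation update per transition in `path`."""
    n = len(M)
    for s, s_next in zip(path, path[1:]):
        for j in range(n):
            onehot = 1.0 if s == j else 0.0
            target = onehot + gamma * M[s_next][j]
            M[s][j] += alpha * (target - M[s][j])


def episodes_to_learn(n=4, alpha=0.1, gamma=0.9, tol=0.01):
    """Count runs along a hypothetical track 0 -> n-1 until M[0][n-1] is within tol of gamma**(n-1)."""
    # Start with no predictions: each state only "predicts" itself.
    M = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    path = list(range(n))  # deterministic run; the last state is terminal
    target = gamma ** (n - 1)  # true discounted occupancy of the end state from the start
    episodes = 0
    while abs(M[0][n - 1] - target) > tol * target:
        td_sr_episode(M, path, alpha, gamma)
        episodes += 1
    return episodes


print(episodes_to_learn(alpha=1.0))  # 3: one run per step of the track
print(episodes_to_learn(alpha=0.1))  # many more runs
```

Even with α = 1 the prediction propagates back only one state per run, and with a smaller α it takes many more runs; the same gradual propagation applies whenever the transition structure changes, which is one way to read the one-shot limitation above.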

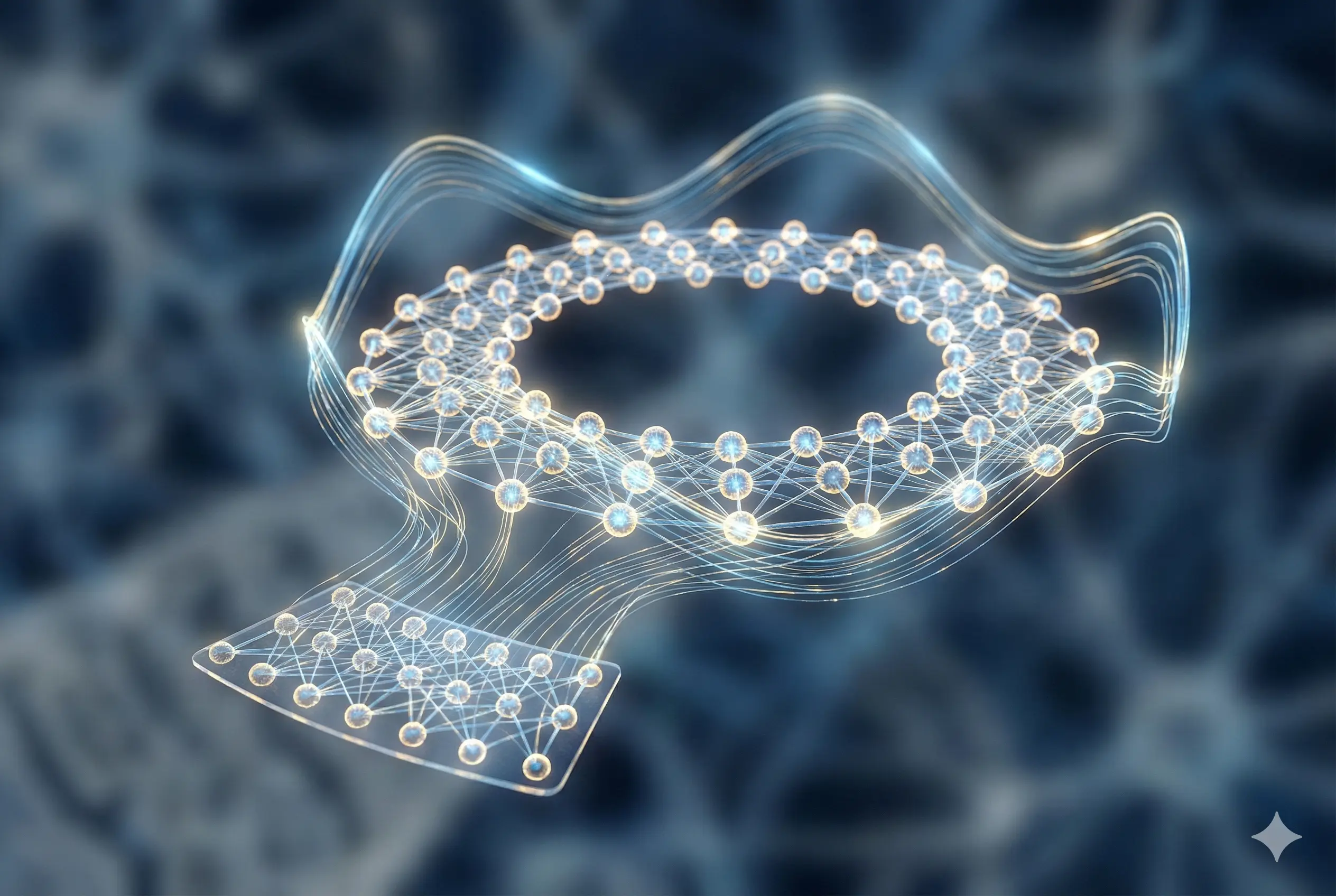

Structural Abstraction and the TEM

- The Tolman-Eichenbaum Machine learns latent relational structure shared across both spatial and non-spatial tasks.

- Grid cells provide an allocentric representation of the environment: by performing path integration, they supply a common structural code that generalizes across environments.

- The place map is a “where-map” that binds locations to the entities found there. Rather than being tied to a specific sensory input (as in a predictive map), it encodes the relationships between locations in a graph-like structure.

- Thus grid-like “g-cells” and conjunctive “p-cells” (items × locations) allow for flexible relational inference.

- The conjunctive “prior” is first learned through the inference step and is then updated through a generative process as the agent explores the environment.

- Emergent cell types: Tolman-Eichenbaum machines account for a host of cell types, including grid, band, border, and object-vector-like units.
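A heavily simplified sketch of conjunctive memory, assuming one-hot codes for locations g and items x and a Hebbian outer-product memory; the actual TEM uses learned, distributed codes and attractor dynamics, none of which is modelled here, and all names below are hypothetical. Each memory is the conjunctive code $p = \mathrm{vec}(x \otimes g)$, stored by adding $p p^\top$ to a memory matrix; querying with a location code and an "unknown" (all-ones) item code retrieves the item bound to that location.

```python
def one_hot(i, n):
    """Return a length-n one-hot list with a 1.0 at index i."""
    return [1.0 if k == i else 0.0 for k in range(n)]


def conjunctive(x, g):
    """p = vec(x ⊗ g): one unit per (item, location) pair, item-major order."""
    return [xi * gj for xi in x for gj in g]


def store(memory, p):
    """Hebbian outer-product update: memory += p p^T."""
    for i, pi in enumerate(p):
        for j, pj in enumerate(p):
            memory[i][j] += pi * pj


def recall_item(memory, g, n_items):
    """Return one score per item for the location coded by g."""
    query = conjunctive([1.0] * n_items, g)  # item unknown, location known
    size = len(query)
    out = [sum(memory[i][k] * query[k] for k in range(size)) for i in range(size)]
    n_locs = len(g)
    # Sum the retrieved conjunctive activity over locations to read out item scores.
    return [sum(out[i * n_locs + j] for j in range(n_locs)) for i in range(n_items)]


N_ITEMS, N_LOCS = 3, 4
size = N_ITEMS * N_LOCS
memory = [[0.0] * size for _ in range(size)]
store(memory, conjunctive(one_hot(2, N_ITEMS), one_hot(0, N_LOCS)))  # item 2 at location 0
store(memory, conjunctive(one_hot(0, N_ITEMS), one_hot(3, N_LOCS)))  # item 0 at location 3
print(recall_item(memory, one_hot(0, N_LOCS), N_ITEMS))  # [0.0, 0.0, 1.0]
print(recall_item(memory, one_hot(3, N_LOCS), N_ITEMS))  # [1.0, 0.0, 0.0]
```

Querying location 1, where nothing was stored, returns all zeros. The sketch captures only the binding idea behind p-cells, not how the TEM learns g or infers its current location.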

Limitations of the TEM

- Struggles with aliased sensory inputs (same cues in different places).

- No compositional or segmental reuse of learned structure.

- Operates in allocentric (map-based) rather than egocentric (self-centered) reference frames, thus failing at egocentric generalization.

Biological Disentanglement and Theoretical Connections

- Memory retrieval in the TEM is mathematically analogous to the transformer self-attention mechanism, as shown in the table below.

| TEM | Transformer |
|---|---|

- Attention-based mechanisms may unify neural and artificial models of memory.
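The TEM-transformer analogy can be illustrated with a toy retrieval step, assuming (for this sketch only) that stored location codes play the role of keys, stored item codes the role of values, and the current location code the query. With a softmax over the query-key scores this is dot-product attention with inverse temperature `beta` (a standard transformer uses 1/√d); dropping the softmax leaves a linear readout that weights each memory by its raw overlap with the query. The function name and numbers are hypothetical.

```python
import math


def retrieve(query, keys, values, use_softmax=True, beta=1.0):
    """Weight each stored value by its key's match to the query and sum."""
    scores = [beta * sum(q * k for q, k in zip(query, key)) for key in keys]
    if use_softmax:
        peak = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
    else:
        weights = scores  # linear readout: raw dot products, no normalization
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


# Two memories whose location codes overlap (hypothetical values).
keys = [[1.0, 0.0], [0.8, 0.6]]
values = [[1.0, 0.0], [0.0, 1.0]]
query = [1.0, 0.0]  # currently at the first location

print(retrieve(query, keys, values, use_softmax=False))          # [1.0, 0.8]
print(retrieve(query, keys, values, use_softmax=True, beta=5.0))  # about [0.73, 0.27]
```

In the linear readout the overlapping second memory contributes almost as strongly as the correct one, whereas the softmax suppresses it. This is only an intuition for what the non-linearity changes, not a reproduction of any published result.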

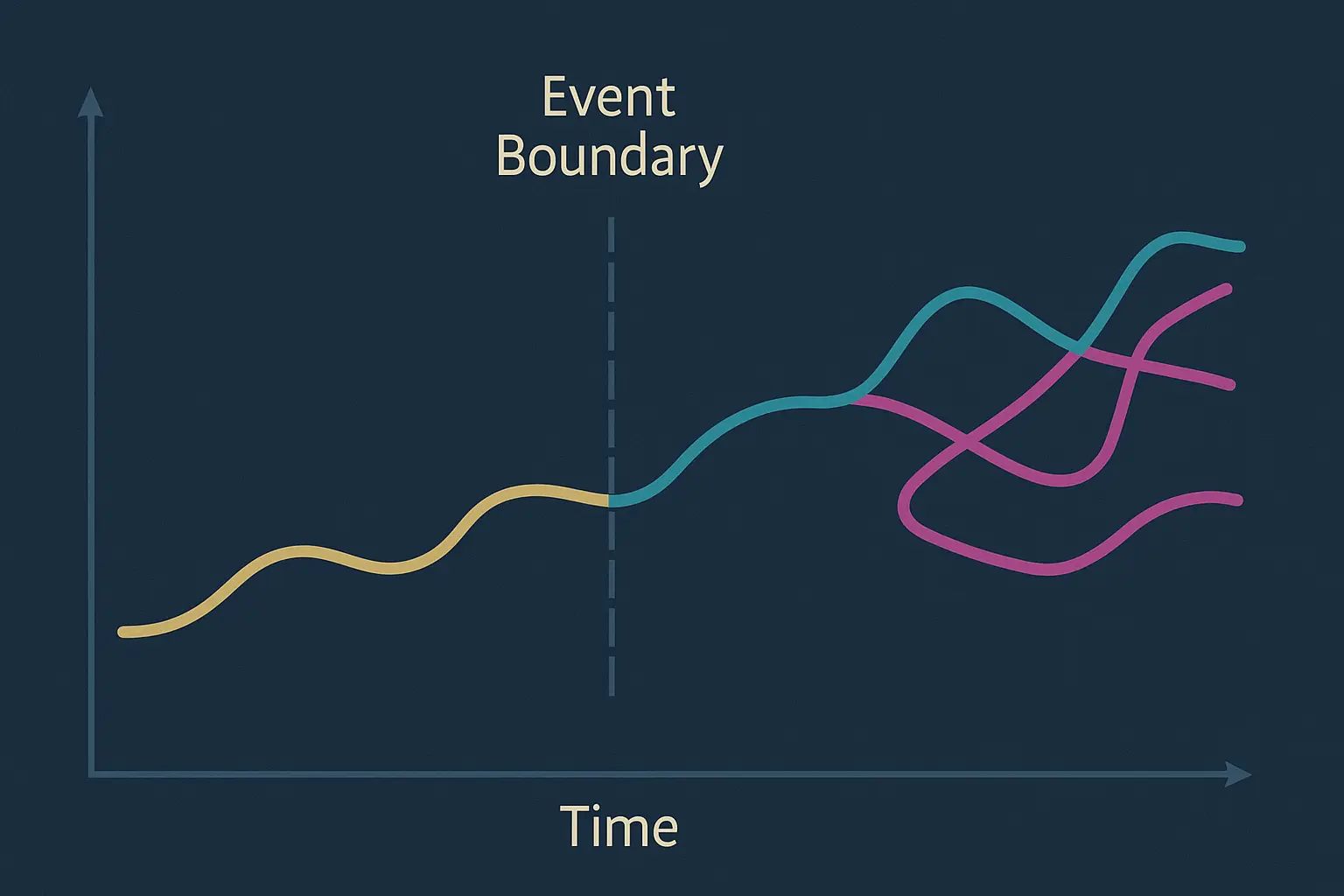

Learning from Sequences: CSCG

- Models the hippocampus as a cloned Hidden Markov Model (HMM) with action-conditioned transitions.

- Learns context-specific structure even with aliased observations (i.e., the same sensory stimuli in different contexts).

- Disambiguates repeated observations across episodes, allowing for egocentric generalization.

- Enables map stitching and place-field remapping, mirroring experimental findings (i.e., learned structure can be reused in new contexts).
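To show how clones resolve aliasing, here is a toy forward-filtering sketch of a cloned HMM, assuming a hand-specified model rather than one learned from data (a real CSCG learns its transitions, e.g. with expectation-maximization). Every hidden clone emits exactly one observation, so the aliased observation "A" gets two clones, one reached after "B" and one after "C"; transitions are indexed by action, though only a single hypothetical "forward" action is defined to keep it short. The corridor layout, clone indices, and function names are all hypothetical.

```python
# Clone index -> the single observation that clone emits (deterministic emissions).
CLONE_OBS = ["B", "C", "A", "A", "D", "E"]

# Action-conditioned transitions: TRANSITIONS[action][clone] = {next_clone: probability}.
# Two corridors share the aliased observation "A": B -> A -> D and C -> A -> E.
TRANSITIONS = {
    "forward": {0: {2: 1.0}, 1: {3: 1.0}, 2: {4: 1.0}, 3: {5: 1.0}, 4: {4: 1.0}, 5: {5: 1.0}},
}


def normalize(v):
    total = sum(v)
    if total == 0:
        raise ValueError("observation sequence is impossible under this model")
    return [x / total for x in v]


def step(belief, action):
    """Push a belief over clones through the transitions for one action."""
    nxt = [0.0] * len(CLONE_OBS)
    for i, b in enumerate(belief):
        for j, p in TRANSITIONS[action].get(i, {}).items():
            nxt[j] += b * p
    return nxt


def filter_clones(observations, actions):
    """Forward filtering: belief over clones after the whole sequence."""
    belief = normalize([1.0 if o == observations[0] else 0.0 for o in CLONE_OBS])
    for action, obs in zip(actions, observations[1:]):
        predicted = step(belief, action)
        # Keep only clones that emit the observed symbol, then renormalize.
        belief = normalize([p if o == obs else 0.0 for p, o in zip(predicted, CLONE_OBS)])
    return belief


def predict_next_obs(belief, action):
    """Distribution over the next observation after taking `action`."""
    dist = {}
    for p, o in zip(step(belief, action), CLONE_OBS):
        if p > 0:
            dist[o] = dist.get(o, 0.0) + p
    return dist


after_b = filter_clones(["B", "A"], ["forward"])
after_c = filter_clones(["C", "A"], ["forward"])
print(predict_next_obs(after_b, "forward"))  # {'D': 1.0}
print(predict_next_obs(after_c, "forward"))  # {'E': 1.0}
print(predict_next_obs(filter_clones(["A"], []), "forward"))  # {'D': 0.5, 'E': 0.5}
```

Seeing "A" on its own leaves the two clones equally likely, while the preceding context selects one clone and hence a different prediction, which is the sense in which clones disambiguate aliased observations.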

Limitations of CSCG

- Less biologically plausible than the TEM, and does not account for the distribution of grid cells.

Take Home Message

Hippocampal circuits serve as contextual, predictive, and relational memory engines, integrating spatial, relational, and sequential information. While models like SR, TEM, and CSCG each explain some hippocampal phenomena, no single framework yet captures the full diversity and flexibility of memory in context, which remains a major open challenge in neuroscience and AI.

Key Discussion Points

Biological Plausibility and Model Performance: The speaker explained that the TEM's limitations, such as its reliance on an allocentric reference frame and its inability to handle aliasing, restrict its problem-solving capabilities. However, it was also noted that a model's biological plausibility does not always correlate with its performance, and that performance alone is not a good indicator of scientific value.

The Role of Computational Neuroscience: The speaker emphasized that true understanding of the brain requires more than replicating its behavior; it involves breaking the system down into functional modules and understanding their interactions. While computational models can provide quantitative measures for diagnosis and tracking, these measures cannot yet explain the underlying mechanisms.

Non-linearity in Models: A question was raised about the apparent linearity of the TEM compared to transformers, since the TEM does not contain a non-linear activation function (softmax). The speaker explained that in Whittington et al. (2022), adding this non-linearity to the TEM improved its learning speed, highlighting the complementary relationship between biological inspiration and mathematical functions.

Original Video

References

- 1

- 2

- 3

- 4