November 2025

Unifying computational principles underlie flexible cognition, from the orbitofrontal cortex to compositional task representations, working-memory–habit mixtures, and recurrent network subspaces.

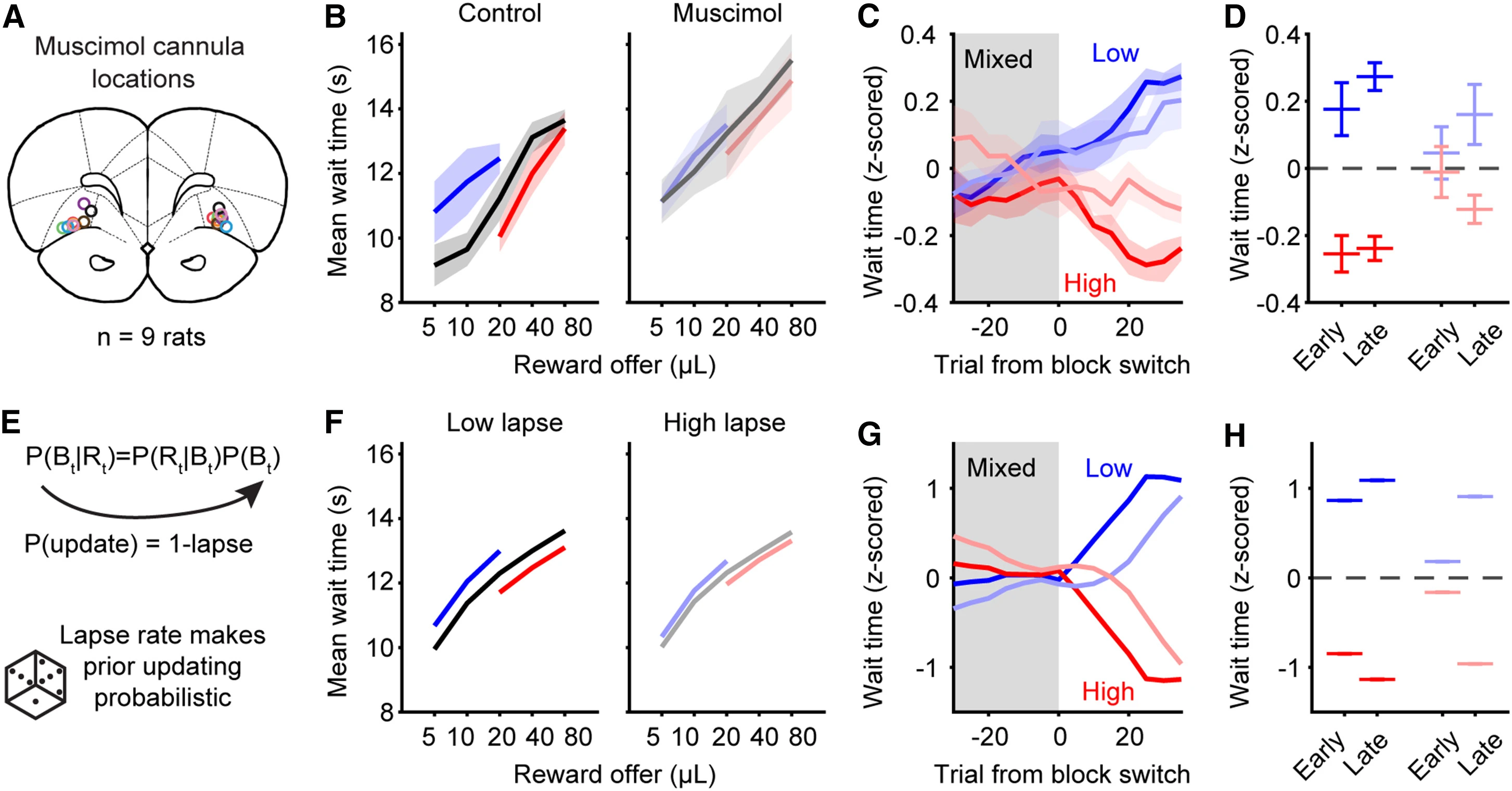

The orbitofrontal cortex updates beliefs for state inference

- Core Discovery: The orbitofrontal cortex (OFC) performs belief updating for inferring hidden states in dynamic environments.

- Method: Rats performed a temporal wagering task with latent reward blocks. Neural and behavioral evidence showed that expert rats inferred block transitions rather than adapting via reinforcement alone.

- Findings:

  - OFC inactivation (via muscimol) impaired belief updating and slowed behavioral adaptation without affecting reward valuation.

  - Population dynamics, analyzed with hierarchical linear dynamical system (LDS) models, revealed slow latent factors that reflect inferred states and are distinct from trial-by-trial reward signals.

  - Early in training, rats relied on divisive normalization but transitioned to true inference as OFC representations matured.

- Conclusion: The OFC supports recursive Bayesian updating of subjective beliefs, providing a mechanistic link between neural population dynamics and probabilistic reasoning under partial observability.
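Recursive Bayesian updating over hidden blocks can be sketched as a hidden-Markov-model forward filter: predict how the block may have changed since the last trial, then weight that prediction by how likely the observed reward is under each block. This is a minimal sketch under stated assumptions, not the model fit in the study. The block labels, reward levels, per-block likelihoods, hazard rate, and the helper names `transition_matrix` and `update_belief` are all hypothetical placeholders.

```python
import numpy as np

# Hypothetical block types and reward likelihoods (assumptions, not the
# task's real parameters). Rows: blocks; columns: reward levels.
BLOCKS = ["low", "mixed", "high"]
REWARD_LEVELS = [5, 10, 20, 40, 80]  # hypothetical reward sizes
LIKELIHOOD = np.array([
    [0.35, 0.35, 0.30, 0.00, 0.00],  # low block: only small rewards occur
    [0.20, 0.20, 0.20, 0.20, 0.20],  # mixed block: every reward equally likely
    [0.00, 0.00, 0.30, 0.35, 0.35],  # high block: only large rewards occur
])

def transition_matrix(hazard: float, n: int) -> np.ndarray:
    """T[i, j] = P(next block j | current block i): stay with prob 1 - hazard,
    otherwise switch uniformly to one of the other blocks."""
    T = np.full((n, n), hazard / (n - 1))
    np.fill_diagonal(T, 1.0 - hazard)
    return T

def update_belief(belief: np.ndarray, reward: int, hazard: float = 0.05) -> np.ndarray:
    """One step of the HMM forward filter (recursive Bayesian update)."""
    # 1. Predict: propagate the current belief through the transition model.
    prior = transition_matrix(hazard, len(belief)).T @ belief
    # 2. Update: weight by the likelihood of the observed reward, renormalize.
    posterior = prior * LIKELIHOOD[:, REWARD_LEVELS.index(reward)]
    return posterior / posterior.sum()

belief = np.full(len(BLOCKS), 1.0 / len(BLOCKS))  # start agnostic
for r in [80, 40, 80, 5, 10, 5]:
    belief = update_belief(belief, r)
    print(r, belief.round(3))
```

In this sketch the hazard rate sets how quickly belief can move between blocks. A reward that is impossible under a block (likelihood 0) eliminates that block at once, while a reward that several blocks can produce shifts belief only gradually.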

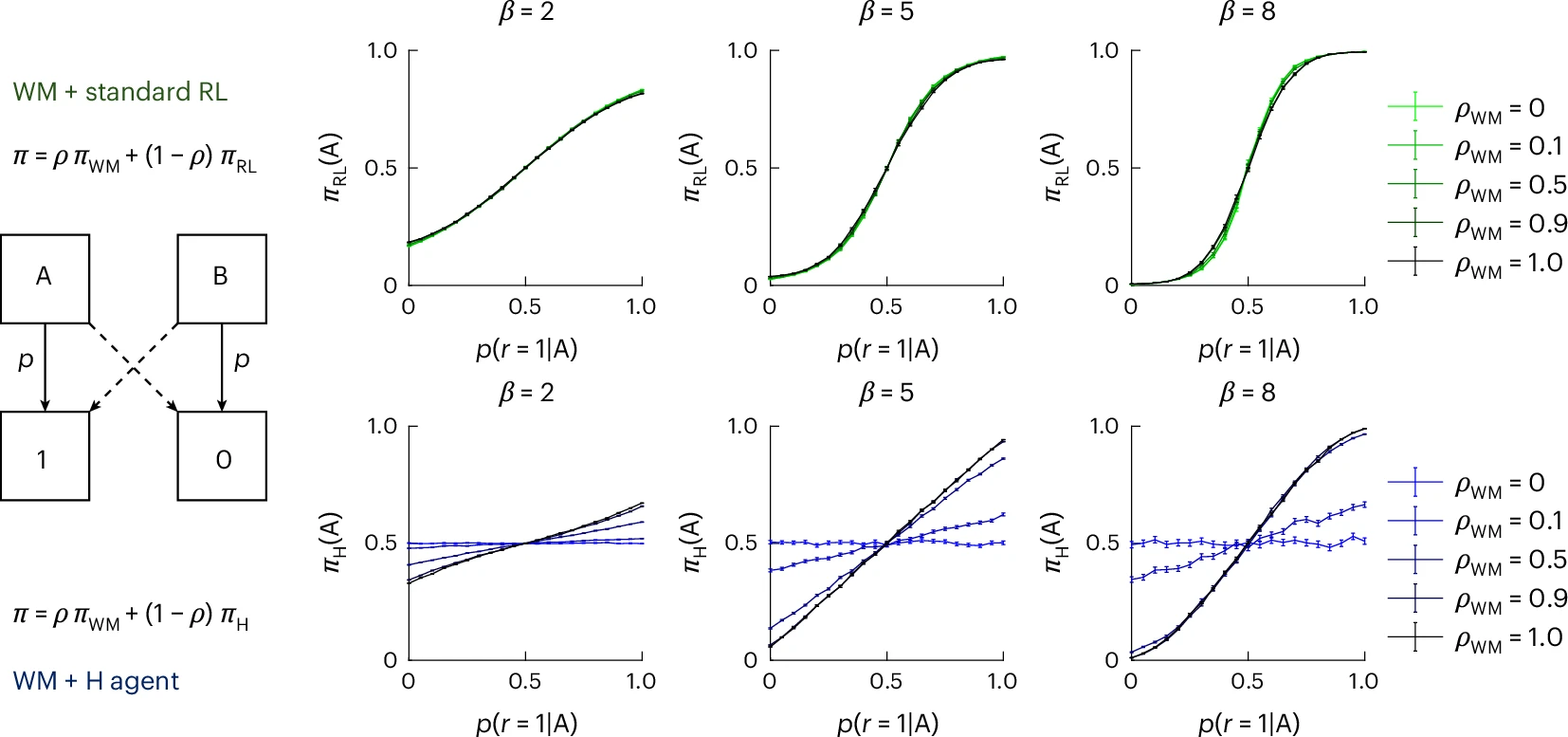

A habit and working memory model as an alternative account of human reward-based learning

- Objective: To test whether human “reward-based learning” actually reflects reinforcement learning (RL) computations.

- Approach: Meta-analysis and computational modeling of seven large datasets (n = 594) collected with the RLWM paradigm, which separates working memory (WM) from slower learning processes by varying how many stimuli must be learned at once (set size).

- Results:

  - Behavior is best explained by a mixture of WM and a habit-like (H) process, not by standard RL.

  - The WM process is fast, capacity-limited, and sensitive to both positive and negative feedback.

  - The H process is slow and outcome-insensitive: a Hebbian-like association between stimulus and action.

  - Together, they mimic an RL agent’s policy but compute no reward prediction error.

- Implications: Traditional RL models over-attribute learning to value-based computation. On this account, working memory and habit systems jointly drive behavior, which reframes dopaminergic and striatal “RL signals” as emergent from a mixture of non-RL processes.
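A small simulation can make the WM + H mixture concrete. This is a sketch under stated assumptions, not the authors' fitted model. The class `WMHabitAgent`, the `simulate` helper, every parameter value, the WM decay, the asymmetric feedback rates, and the capacity-scaled mixture weight (written in the common RLWM-style form rho × min(1, capacity / set size)) are illustrative choices. The habit update deliberately ignores the reward.

```python
import numpy as np

def softmax(x, beta):
    e = np.exp(beta * (x - x.max()))
    return e / e.sum()

class WMHabitAgent:
    """Hypothetical WM + habit mixture agent; parameter values are illustrative."""

    def __init__(self, n_stim, n_actions, capacity=3, rho=0.9, phi=0.1,
                 lr_neg=0.5, eta=0.1, beta=8.0, rng=None):
        self.n_actions = n_actions
        self.W = np.full((n_stim, n_actions), 1.0 / n_actions)  # WM table
        self.H = np.full((n_stim, n_actions), 1.0 / n_actions)  # habit table
        # Reliance on WM shrinks once set size exceeds capacity.
        self.w = rho * min(1.0, capacity / n_stim)
        self.phi, self.lr_neg, self.eta, self.beta = phi, lr_neg, eta, beta
        self.rng = rng if rng is not None else np.random.default_rng()

    def policy(self, s):
        p_wm = softmax(self.W[s], self.beta)
        p_h = softmax(self.H[s], self.beta)
        return self.w * p_wm + (1.0 - self.w) * p_h

    def act(self, s):
        return int(self.rng.choice(self.n_actions, p=self.policy(s)))

    def update(self, s, a, r):
        # WM: every entry decays toward uniform on each trial, then the
        # feedback is stored (one-shot after reward, partial after no reward).
        self.W += self.phi * (1.0 / self.n_actions - self.W)
        lr = 1.0 if r == 1 else self.lr_neg
        self.W[s, a] += lr * (r - self.W[s, a])
        # H: Hebbian-like and outcome-insensitive. r is never used here;
        # the chosen action is strengthened whatever the outcome.
        onehot = np.eye(self.n_actions)[a]
        self.H[s] += self.eta * (onehot - self.H[s])

def simulate(set_size, reps=20, n_actions=3, seed=0):
    """Run one block and return the proportion of correct choices."""
    rng = np.random.default_rng(seed)
    correct = rng.integers(n_actions, size=set_size)  # hypothetical mapping
    agent = WMHabitAgent(set_size, n_actions, rng=rng)
    stims = np.repeat(np.arange(set_size), reps)
    rng.shuffle(stims)
    hits = []
    for s in stims:
        a = agent.act(s)
        r = int(a == correct[s])
        agent.update(s, a, r)
        hits.append(r)
    return float(np.mean(hits))

for ns in (3, 6):
    print(f"set size {ns}: accuracy {simulate(ns):.2f}")
```

In this sketch the H table ends up favoring correct responses only because WM steered early choices toward them. The mixture can therefore produce an RL-like learning curve even though no reward prediction error is computed anywhere in the code.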

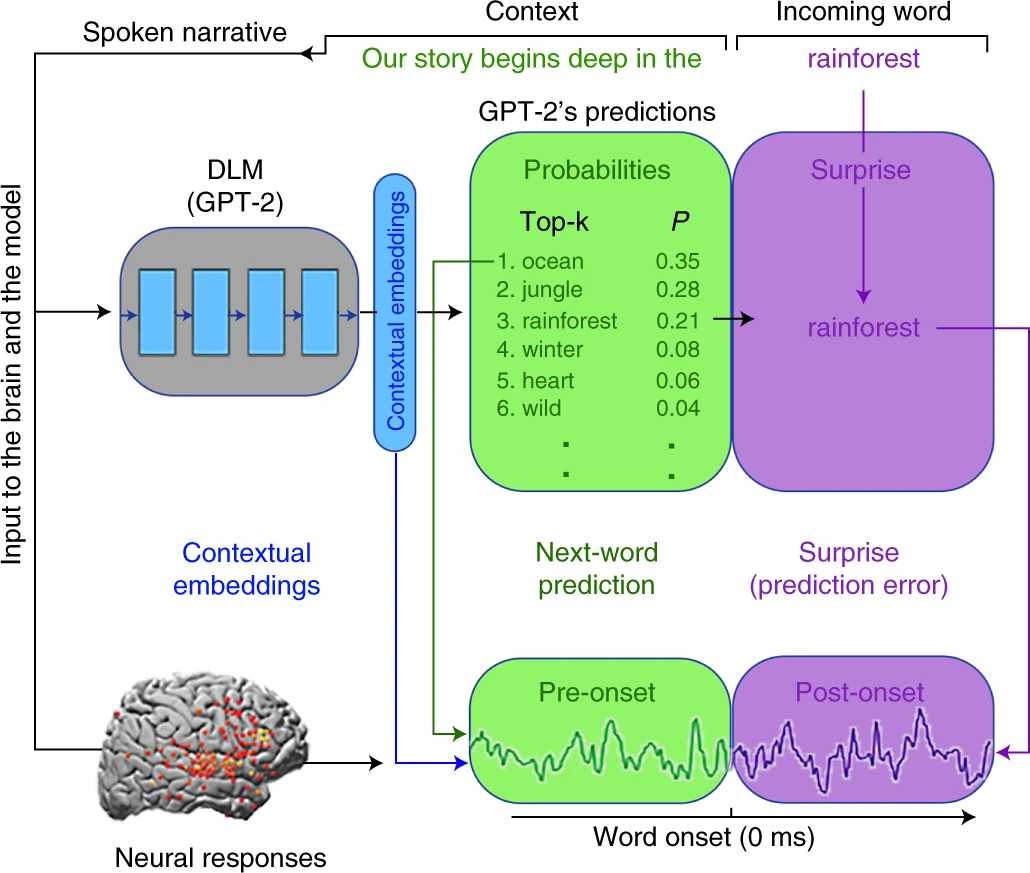

Shared computational principles for language processing in humans and deep language models

- Scope: This perspective synthesizes parallels between brains and artificial networks, identifying shared design principles.

- Key Themes:

  - Representation learning: Both brains and artificial neural networks (ANNs) optimize internal representations for predictive efficiency under energy constraints.

  - Credit assignment: Work on synaptic plasticity and on gradient-based optimization is converging on biologically plausible local learning rules (e.g., dendritic approximations of backpropagation).

  - Compositionality and modularity: Cortical microcircuits mirror transformer-like hierarchical, compositional architectures, supporting transfer and few-shot learning.

  - Stochasticity and exploration: Neural noise in brains and dropout regularization in ANNs both enable robust generalization under uncertainty.

- Framework Proposal: A “shared objective hypothesis,” which holds that both biological and artificial systems aim to minimize future surprise through predictive coding and efficient resource use, positioning the brain as a self-regularizing, energy-constrained learner.
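One standard way to make "minimizing surprise via predictive coding" concrete is gradient descent on precision-weighted prediction errors in a linear-Gaussian model. The sketch assumes a single latent cause `mu` with prior N(m, var_p) and an observation y ~ N(w·mu, var_y). The function names and parameter values are hypothetical, and this is a textbook-style illustration, not a model from the perspective itself. For this model the energy F is, up to an additive constant, an upper bound on the surprise -log p(y), and the bound becomes tight at the posterior mean.

```python
def predictive_coding(y, m=0.0, w=2.0, var_y=1.0, var_p=1.0, lr=0.1, steps=200):
    """Infer latent mu by descending the energy
    F(mu) = 0.5*(y - w*mu)**2/var_y + 0.5*(mu - m)**2/var_p."""
    mu = m  # start from the prior mean
    energy = []
    for _ in range(steps):
        err_y = (y - w * mu) / var_y  # precision-weighted sensory prediction error
        err_p = (mu - m) / var_p      # precision-weighted prior prediction error
        energy.append(0.5 * (y - w * mu) ** 2 / var_y + 0.5 * (mu - m) ** 2 / var_p)
        mu += lr * (w * err_y - err_p)  # -dF/dmu = w*err_y - err_p
    return mu, energy

def posterior_mean(y, m=0.0, w=2.0, var_y=1.0, var_p=1.0):
    """Closed-form posterior mean of mu, for comparison."""
    return (w * y / var_y + m / var_p) / (w ** 2 / var_y + 1.0 / var_p)

mu, energy = predictive_coding(3.0)
print(mu, posterior_mean(3.0), energy[0], energy[-1])
```

Each update uses only the two prediction errors available at that node, the locality that has made predictive coding networks a candidate for biologically plausible local learning rules. The step size must stay below 2 / (w**2/var_y + 1/var_p) for the descent to converge.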

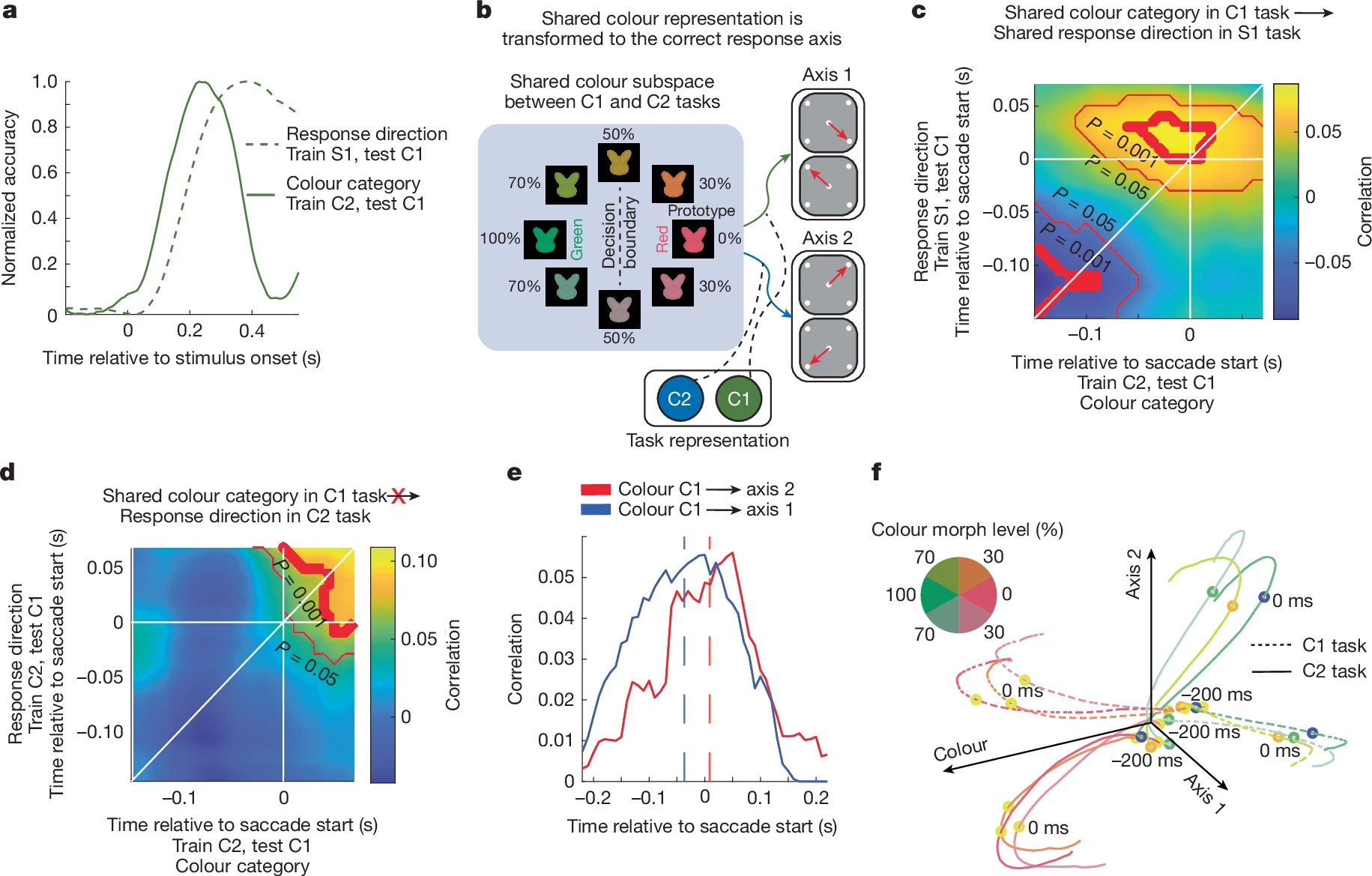

Compositional tasks with subspace structure in recurrent neural networks

- Central Question: How do neural systems represent compositional tasks—combining operations like “add” + “subtract” or “left” + “right”?

- Model: Recurrent neural networks (RNNs) trained on compositional sensorimotor tasks spontaneously organize their activity into low-dimensional subspaces corresponding to task components.

- Findings:

  - Task variables (rule, stimulus, response) occupy mutually orthogonal subspaces, enabling linear composition into novel tasks.

  - This structure mirrors factorized representations in biological neural data from prefrontal cortex (PFC) and parietal cortex.

  - Network geometry predicts generalization: the more orthogonal the subspaces, the greater the compositional flexibility.

- Implication: Both artificial and biological recurrent circuits implement subspace-based modularity, which allows neural trajectories to be reused for flexible task recombination and links population geometry to the algorithmic foundations of abstraction.
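The link between orthogonality and compositional flexibility can be illustrated with hand-built geometry rather than a trained RNN. The sketch assumes a population state formed by adding a rule code and a stimulus code, each embedded in its own 2-D subspace, and a fixed readout that projects onto the rule subspace. The population size, the codes, and the names `stim_subspace` and `rule_readout_error` are hypothetical. Tilting the stimulus subspace by angle theta leaks sin(theta) times the stimulus code into the rule readout, so the readout works for every rule × stimulus pairing only when the subspaces are orthogonal.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 50  # hypothetical population size

# Orthonormal basis: the first two columns span a "rule" subspace,
# the next two span a "stimulus" subspace orthogonal to it.
Q, _ = np.linalg.qr(rng.standard_normal((N, 4)))
U_rule, U_stim0 = Q[:, :2], Q[:, 2:]

def stim_subspace(theta):
    """Tilt the stimulus subspace toward the rule subspace by angle theta
    (0 = orthogonal, pi/2 = identical). Columns stay orthonormal."""
    return np.cos(theta) * U_stim0 + np.sin(theta) * U_rule

rules = rng.standard_normal((3, 2))  # hypothetical rule codes
stims = rng.standard_normal((3, 2))  # hypothetical stimulus codes

def rule_readout_error(theta):
    """Mean error of a fixed rule-subspace projection across all
    rule x stimulus combinations."""
    U_stim = stim_subspace(theta)
    errors = []
    for a_rule in rules:
        for a_stim in stims:
            x = U_rule @ a_rule + U_stim @ a_stim  # compose by addition
            a_hat = U_rule.T @ x                   # project onto rule subspace
            errors.append(np.linalg.norm(a_hat - a_rule))
    return float(np.mean(errors))

for deg in (0, 15, 45, 90):
    print(f"tilt {deg:>2} deg: rule readout error {rule_readout_error(np.deg2rad(deg)):.3f}")
```

Because the readout is never retrained, a near-zero error at 0 degrees means any new pairing of known rule and stimulus codes is decoded correctly, which is the sense of "linear composition into novel tasks" this toy captures.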
