June 2025

How Tiny RNNs, Inter-Brain Synchrony, and a Foundation Model Are Transforming Our Understanding of Cognition

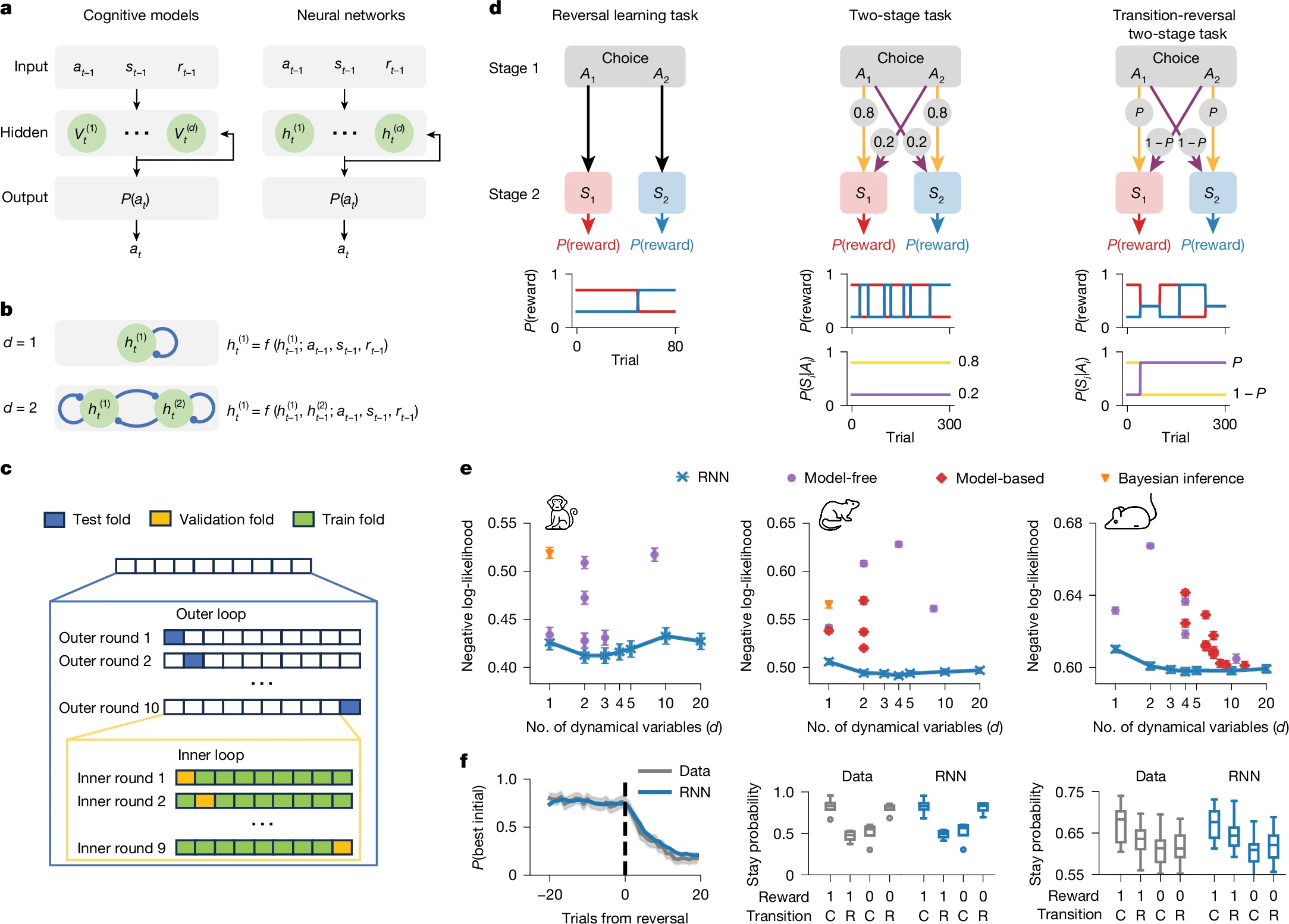

Discovering cognitive strategies with tiny recurrent neural networks

- Introduces a modeling approach using very small (1-4 units) recurrent neural networks (RNNs) to discover interpretable cognitive strategies in animal and human reward-learning tasks.

- Tiny RNNs outperform classical cognitive models at predicting choices and reveal novel behavioral patterns that standard models miss (e.g., variable learning rates, state-dependent perseveration).

- The trained RNNs are interpretable through dynamical systems analysis, enabling visualization and comparison with classic cognitive theories.

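- The framework also makes it possible to estimate the dimensionality of behavior (how many dynamical variables are needed to explain a subject's choices) and offers insights into both biological cognition and meta-reinforcement learning in AI systems.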
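
To make the architecture concrete, here is a minimal NumPy sketch that assumes a two-armed bandit task in which the network receives the previous action and reward as a one-hot input. The names `TinyGRU`, `choice_probabilities` and `phase_portrait` are hypothetical, the weights are random rather than fitted, and the paper's exact architecture and training procedure may differ. The sketch shows two ingredients from the summary: scoring choice predictions by negative log-likelihood (the quantity one would minimize when fitting a network to a subject's choices) and a one-dimensional phase portrait of how the hidden state changes under each action-reward combination.

```python
import numpy as np

N_HIDDEN, N_INPUT, N_ACTIONS = 1, 4, 2  # 1 unit; 4 = (previous action, reward) combos

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(v):
    e = np.exp(v - v.max())  # subtract the max for numerical stability
    return e / e.sum()

def encode(action, reward):
    """One-hot input for the (previous action, previous reward) pair."""
    x = np.zeros(N_INPUT)
    x[2 * action + reward] = 1.0
    return x

class TinyGRU:
    """GRU cell with a linear readout to action logits (random, untrained weights)."""

    def __init__(self, seed=0, scale=0.5):
        rng = np.random.default_rng(seed)
        # Parameters for the update gate (z), reset gate (r) and candidate state (n).
        self.W = {g: rng.normal(0, scale, (N_HIDDEN, N_INPUT)) for g in "zrn"}
        self.U = {g: rng.normal(0, scale, (N_HIDDEN, N_HIDDEN)) for g in "zrn"}
        self.b = {g: np.zeros(N_HIDDEN) for g in "zrn"}
        self.W_out = rng.normal(0, scale, (N_ACTIONS, N_HIDDEN))
        self.b_out = np.zeros(N_ACTIONS)

    def step(self, h, x):
        z = sigmoid(self.W["z"] @ x + self.U["z"] @ h + self.b["z"])
        r = sigmoid(self.W["r"] @ x + self.U["r"] @ h + self.b["r"])
        n = np.tanh(self.W["n"] @ x + self.U["n"] @ (r * h) + self.b["n"])
        return (1.0 - z) * n + z * h  # convex mix of candidate and previous state

    def logits(self, h):
        return self.W_out @ h + self.b_out

def choice_probabilities(model, actions, rewards):
    """P(choice on trial t | actions and rewards on trials before t)."""
    h = np.zeros(N_HIDDEN)
    probs = []
    for a, r in zip(actions, rewards):
        probs.append(softmax(model.logits(h)))
        h = model.step(h, encode(a, r))
    return np.array(probs)

def negative_log_likelihood(probs, actions):
    """Mean NLL of the observed choices; fitting the network would minimize this."""
    return -np.mean(np.log(probs[np.arange(len(actions)), actions]))

def phase_portrait(model, n_points=41):
    """Change in the single hidden unit, h_next - h, for each input condition."""
    grid = np.linspace(-1.0, 1.0, n_points)
    dh = np.array([
        [model.step(np.array([h]), encode(a, r))[0] - h for h in grid]
        for a in (0, 1) for r in (0, 1)
    ])
    return grid, dh  # shape (4, n_points); rows ordered as in encode()
```

With a single unit and a linear readout, the hidden state fully determines the action logits, so plotting each row of `dh` against `grid` summarizes the whole strategy. A fitted network's portrait could then be set beside the corresponding plot for a classical model such as Q-learning.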

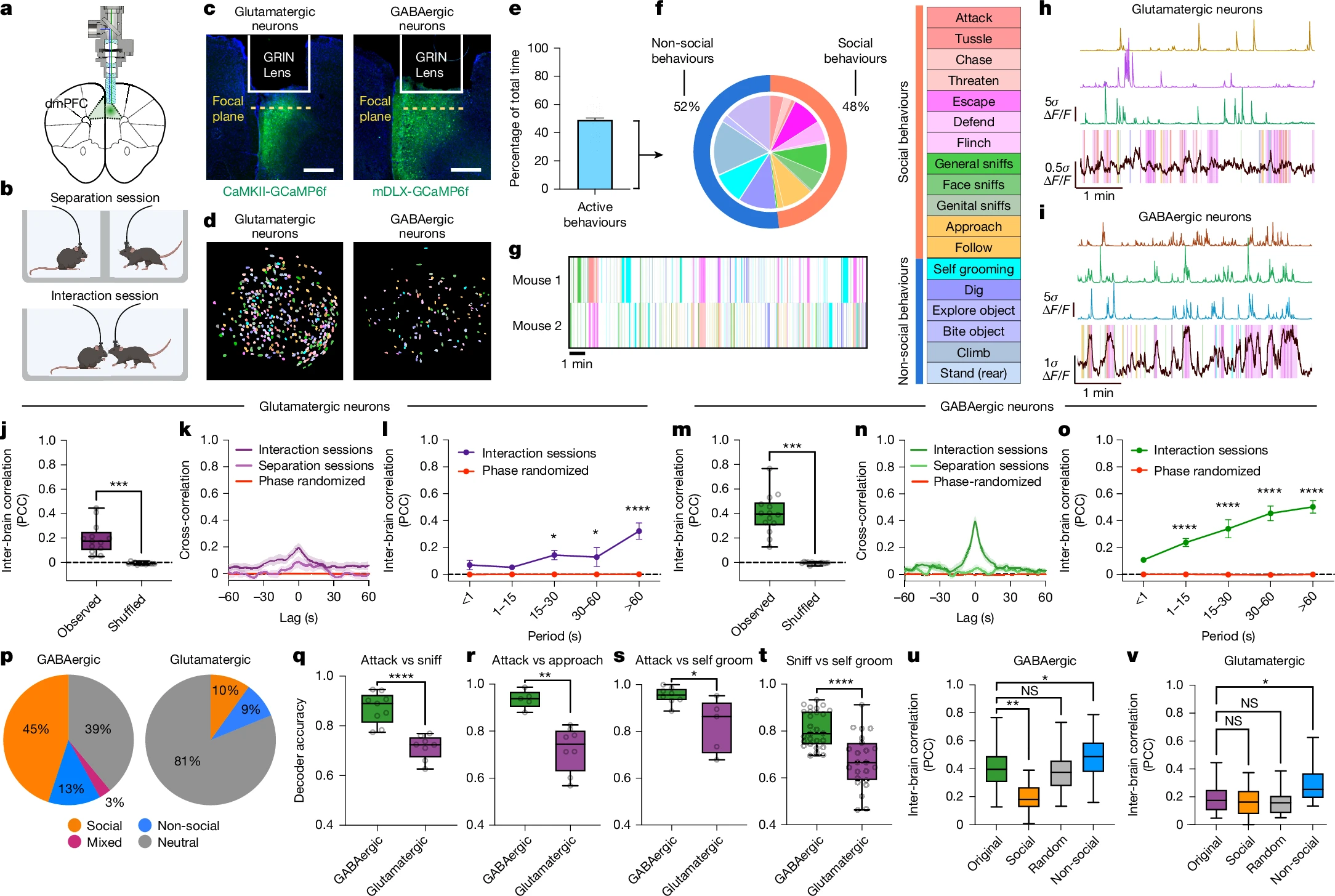

Inter-brain neural dynamics in biological and artificial intelligence systems

- Examines how neural dynamics during social interaction can be partitioned into a “shared” subspace, common to the interacting individuals, and a “unique” subspace specific to each, in both mice and artificial agents.

- Finds that GABAergic neurons in the dorsomedial prefrontal cortex (dmPFC) exhibit significantly more shared neural dynamics across brains than glutamatergic neurons do, especially during social behavior.

- Disruption of shared neural subspace components impairs social interactions in both biological and artificial systems.

- Reveals that shared neural dynamics are a generalizable feature driving social behavior in interacting agents (biological or AI).
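
One simple way to carry out such a partition, assuming simultaneously recorded activity from two interacting animals binned on a common time axis, is partial least squares via an SVD of the cross-covariance matrix between the two brains. The leading singular vectors span a “shared” subspace in each brain, and the orthogonal complement holds the “unique” activity. This is a generic sketch, not necessarily the paper's exact procedure; `shared_unique_split`, `ablate_shared` and the number of shared dimensions `k` are hypothetical. Projecting out the shared components, as `ablate_shared` does, loosely mimics the kind of disruption described above.

```python
import numpy as np

def shared_unique_split(X, Y, k):
    """Split animal A's activity into parts shared with, and unique from, animal B.

    X: (T, N_a) activity of animal A; Y: (T, N_b) activity of animal B, same time bins.
    k: number of shared dimensions (hypothetical; in practice chosen by
       cross-validation or a shuffle test).
    """
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    C = Xc.T @ Yc / (X.shape[0] - 1)      # (N_a, N_b) cross-brain covariance
    U, S, Vt = np.linalg.svd(C, full_matrices=False)
    basis_a = U[:, :k]                    # orthonormal basis of A's shared subspace
    shared = Xc @ basis_a @ basis_a.T     # projection onto the shared subspace
    unique = Xc - shared                  # remainder lies in the orthogonal complement
    return shared, unique, S

def ablate_shared(X, Y, k):
    """Return A's activity with the shared components projected out."""
    _, unique, _ = shared_unique_split(X, Y, k)
    return unique + X.mean(axis=0)

# Synthetic demo: one latent signal drives both brains, plus private noise.
rng = np.random.default_rng(1)
T = 1000
z = rng.normal(size=(T, 1))
X = z @ rng.normal(size=(1, 20)) + 0.5 * rng.normal(size=(T, 20))
Y = z @ rng.normal(size=(1, 15)) + 0.5 * rng.normal(size=(T, 15))
shared, unique, S = shared_unique_split(X, Y, k=1)
```

Because only one latent signal is common to both synthetic brains, the first singular value dwarfs the rest. After the shared projection is removed, the remaining cross-covariance between A and B is no larger than the second singular value.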

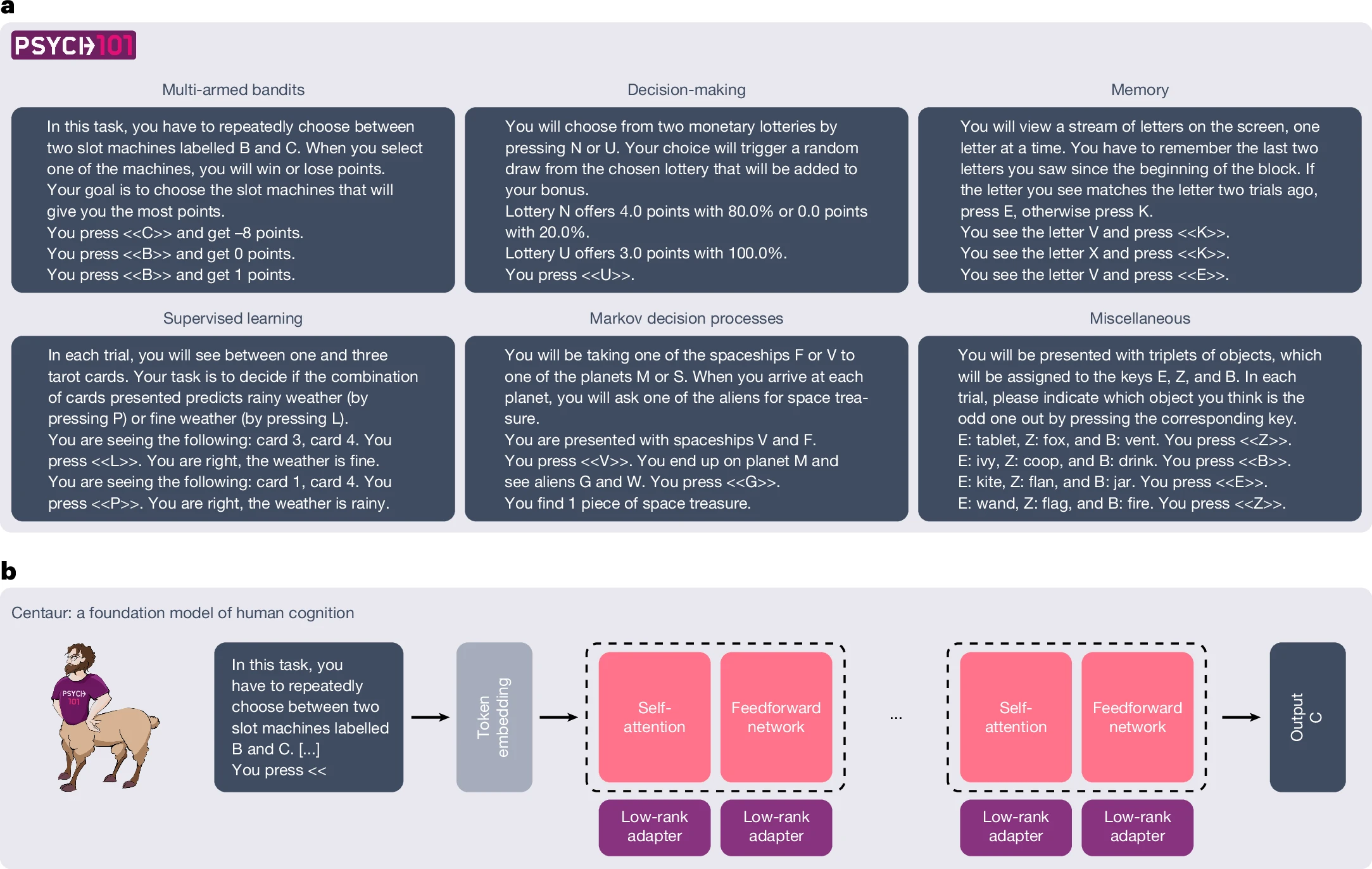

A foundation model to predict and capture human cognition

- Introduces “Centaur,” a foundation model created by fine-tuning Llama 3 on Psych-101, a large natural-language dataset of trial-by-trial human behavioral data from 160 psychological experiments.

- Centaur predicts human choices better than both base LLMs and domain-specific cognitive models, even in new tasks and domains. After fine-tuning, its internal representations also align more closely with human brain activity.

- Demonstrates strong generalization: it can simulate human-like behavior given only a natural-language description of a task.

- Provides a platform for testing, falsifying, and refining cognitive theories at scale.
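
Because Psych-101 expresses each experiment as text, a language model can be trained and evaluated directly on behavior. The following is a minimal sketch of that idea, assuming a two-armed bandit task and modeled loosely on Psych-101's convention of wrapping human responses in `<<` and `>>`. The wording of real transcripts differs, and `Trial`, `to_transcript`, `response_spans` and `mean_nll` are hypothetical helpers. The transcript is rendered from trial-level records, the response spans mark the tokens one would score, and predictive accuracy is summarized as the mean negative log-likelihood of the choices people actually made.

```python
import math
from dataclasses import dataclass

@dataclass
class Trial:
    choice: str  # key the participant pressed, e.g. "J" or "F"
    reward: int  # points received on this trial

def to_transcript(trials, instructions):
    """Render trial-level data as text, wrapping each human response in << >>."""
    lines = [instructions]
    for i, t in enumerate(trials, start=1):
        lines.append(f"Trial {i}: You press <<{t.choice}>>. Reward: {t.reward}.")
    return "\n".join(lines)

def response_spans(text):
    """Character spans of the human responses (the targets a model is scored on)."""
    spans = []
    start = text.find("<<")
    while start != -1:
        end = text.find(">>", start + 2)
        if end == -1:
            break  # unmatched opening marker: ignore the rest
        spans.append((start + 2, end))
        start = text.find("<<", end + 2)
    return spans

def mean_nll(prob_of_human_choice):
    """Average negative log-likelihood of the choices humans actually made."""
    return -sum(math.log(p) for p in prob_of_human_choice) / len(prob_of_human_choice)
```

Lower mean NLL means better prediction, so one way to compare Centaur, its base model and a domain-specific cognitive model is to compute this quantity for each on the same held-out responses.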
